The Future of Machine Vision Imaging Systems

Beginning with the AIA's Camera Link standard, the industrial camera portion of the imaging system has been standardized.

Machine vision systems built around a PC or an embedded processor are largely component based. Even if the lens is motorized, it relies on a separate PC card for motor drive and position feedback. Each component has its own controls, which aren't necessarily integrated with those of the other components.

The world of machine vision imaging is largely performance driven. We're looking for features on a part, finding edges, gaging, and so on. The need for performance, coupled with cost pressures, leads to component-based imaging systems. While modular components can deliver the best performance/cost ratio, they can also leave users short of the fully integrated experience they've come to expect from consumer imaging devices.

By contrast, the world of general consumer imaging devices is driven by features, functions, and ease of use. A good or better image does count, but image quality is largely judged by the human eye across the whole scene. At the image level, machine vision is the more demanding niche, and consumer devices rarely drive its specifications. When it comes to the complete imaging device or system, however, the prevalence of security cameras and various forms of web cameras has begun to shape users' expectations.

In higher end security and web cameras, the camera, zoom optics, and lighting are all part of one integrated imaging system. The user has full control of everything important to the image, including the camera, lens, and lighting, through a single common interface, and these sub-systems interoperate with one another. Users can manually focus, set the aperture, zoom if the device is so equipped, adjust camera settings like shutter speed, and set the light intensity. If the device is web enabled, the user can reach the control interface remotely from any internet access point via internet of things (IoT) technology.

In typical machine vision systems, cameras, optics, and lighting are separate items, each with its own performance criteria. Cameras are largely C-mount to work with a wide variety of threaded optics, and these lenses are usually manually controlled. Lighting is a separate system. If there is any communication between the components, it is vendor specific and proprietary. Even when both the camera and the lighting system have a network connection for control, separate I/O cables must be run to distribute trigger signals if any synchronization or strobe timing is required between them. Even if the optics are motorized for remote control, a lens control card is required to drive the lens and sense the position of each motorized axis, and that card brings its own software and operating environment. The functionality begins to approach the ideal concept but leaves the user far short of the envisioned fully integrated experience.
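To see why camera-to-light timing matters, consider strobed lighting. The light pulse must be on for the camera's entire exposure window, and both devices measure their delays from the same trigger edge. The minimal sketch below checks that condition. The field names and microsecond values are hypothetical and do not correspond to any particular vendor's controller.

```python
from dataclasses import dataclass


@dataclass
class StrobeTiming:
    """Hypothetical timing parameters, in microseconds, measured from a shared trigger edge."""
    exposure_delay_us: float  # trigger-to-exposure-start latency of the camera
    exposure_time_us: float   # exposure (shutter) duration
    strobe_delay_us: float    # trigger-to-light-on latency of the lighting controller
    strobe_width_us: float    # duration of the light pulse


def strobe_covers_exposure(t: StrobeTiming) -> bool:
    """True if the light is on for the whole exposure window."""
    exposure_start = t.exposure_delay_us
    exposure_end = exposure_start + t.exposure_time_us
    strobe_start = t.strobe_delay_us
    strobe_end = strobe_start + t.strobe_width_us
    return strobe_start <= exposure_start and strobe_end >= exposure_end


def min_strobe_width_us(t: StrobeTiming) -> float:
    """Shortest pulse that still covers the exposure at the configured strobe delay."""
    if t.strobe_delay_us > t.exposure_delay_us:
        raise ValueError("light turns on after the exposure starts; reduce the strobe delay")
    return t.exposure_delay_us + t.exposure_time_us - t.strobe_delay_us


# Example: a 500 us exposure that starts 20 us after the trigger.
timing = StrobeTiming(exposure_delay_us=20, exposure_time_us=500,
                      strobe_delay_us=10, strobe_width_us=505)
print(strobe_covers_exposure(timing))  # False: the pulse ends at 515 us, the exposure at 520 us
print(min_strobe_width_us(timing))     # 510
```

The check only means something if both devices see the same trigger edge. That shared edge is what the separate I/O cable provides today.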

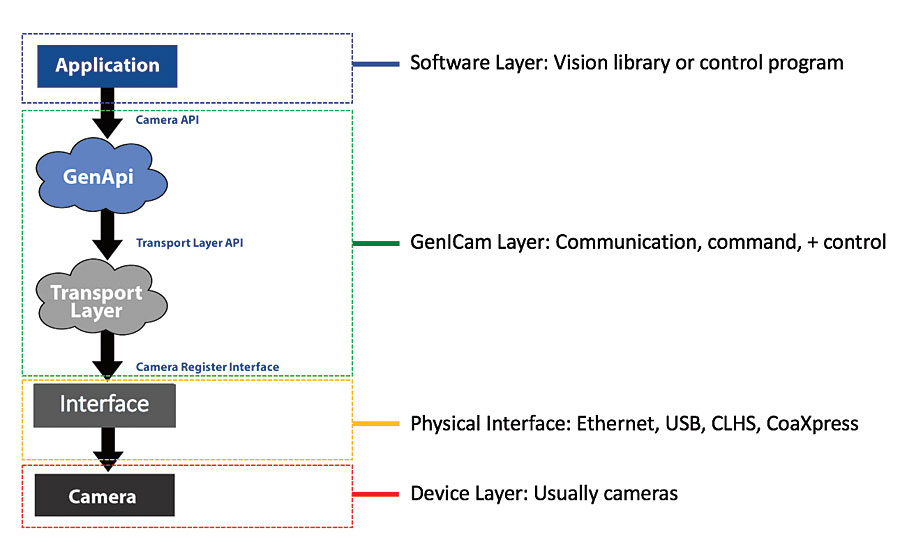

Layers for accessing a GenICam camera

Beginning with the AIA's Camera Link standard and followed by GigE Vision, USB3 Vision, Camera Link HS, and JIIA's CoaXPress standards, the industrial camera portion of the imaging system has been standardized. Each of these standards defines a different physical interface, and each offers its own benefits in cabling distance, bandwidth, signaling support, and real-time performance. Behind the physical interfaces, the EMVA's GenICam standard provides standardized communication, command, and control. GenICam gives the user a single, common application programming interface (API) that spans the complete path from the device to the top-level application software for all compliant devices. Today, all of the camera standards mentioned use GenICam, but it can be applied across any physical interface, including serial and custom interfaces in embedded hardware.
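The idea of one API across many physical interfaces can be sketched in a few lines. In the minimal model below, a device description maps standardized feature names (`ExposureTime` and `Gain` are real SFNC names) to device-specific registers and valid ranges. A transport layer then moves the register reads and writes. This only loosely mirrors how GenICam uses its XML device description and transport layers. The classes, register addresses, and ranges are hypothetical, and the transports are simulated in memory rather than talking to real hardware.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


class Transport(ABC):
    """Hypothetical stand-in for a physical interface such as GigE Vision or USB3 Vision."""

    @abstractmethod
    def read(self, address: int) -> int: ...

    @abstractmethod
    def write(self, address: int, value: int) -> None: ...


class SimulatedTransport(Transport):
    """In-memory register space; a real transport would move bytes over a cable."""

    def __init__(self) -> None:
        self.registers: dict[int, int] = {}

    def read(self, address: int) -> int:
        return self.registers.get(address, 0)

    def write(self, address: int, value: int) -> None:
        self.registers[address] = value


@dataclass(frozen=True)
class Feature:
    address: int  # device-specific register address (made-up values below)
    minimum: int
    maximum: int


class Device:
    """Resolves standardized feature names to device-specific registers."""

    def __init__(self, transport: Transport, description: dict[str, Feature]) -> None:
        self.transport = transport
        self.description = description

    def set(self, name: str, value: int) -> None:
        f = self.description[name]
        if not f.minimum <= value <= f.maximum:
            raise ValueError(f"{name}={value} outside [{f.minimum}, {f.maximum}]")
        self.transport.write(f.address, value)

    def get(self, name: str) -> int:
        return self.transport.read(self.description[name].address)


def configure(device: Device) -> None:
    """Application code: identical no matter which interface the device uses."""
    device.set("ExposureTime", 5000)  # microseconds
    device.set("Gain", 6)             # dB, integer for simplicity


# Two devices with different register layouts behind different interfaces.
gige_cam = Device(SimulatedTransport(), {
    "ExposureTime": Feature(0x1000, 10, 1_000_000),
    "Gain": Feature(0x1004, 0, 24),
})
usb_cam = Device(SimulatedTransport(), {
    "ExposureTime": Feature(0x2040, 20, 500_000),
    "Gain": Feature(0x2044, 0, 48),
})
for cam in (gige_cam, usb_cam):
    configure(cam)
```

The application function `configure` never sees a register address or a cable type. The description and the transport absorb those differences.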

This standardization of the camera interface doesn't necessarily extend to lens and lighting components. While some work has been done to add lighting to the GenICam standard, lighting support is strictly optional and not universally implemented in the market.

Additionally, the AIA and JIIA interface standards mentioned are just that: interface standards. They are concerned with bandwidth and the transfer of information between the device and the host, but they lack a system concept that defines joint commands or interoperation between devices. This still leaves users short of the seamless operation between camera, lighting, and lens systems that higher end security and web cameras led us to envision.

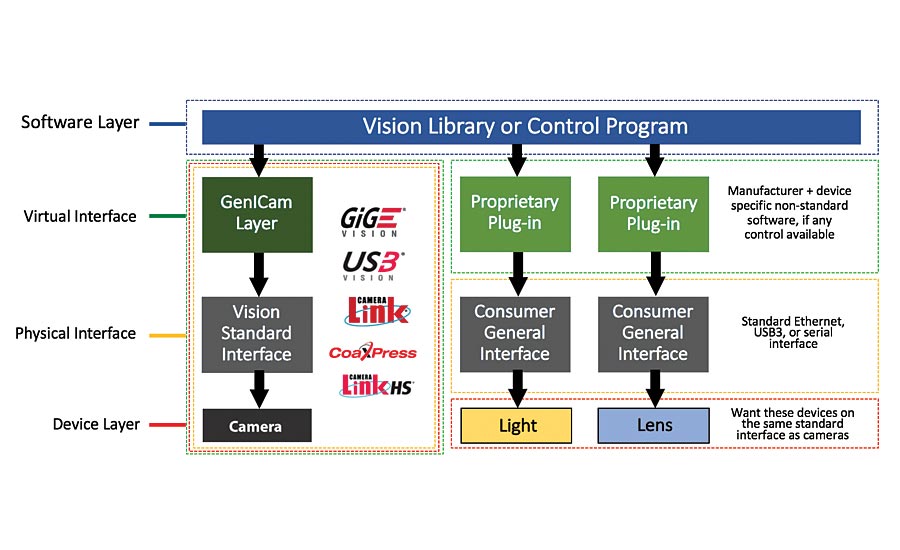

Diagram 1: A typical machine vision system today. The camera is standardized, but lens and lighting systems are proprietary over a general interface and treated as third party plug-ins by the vision system software.

In most machine vision systems today, cameras, lighting, and lens systems are implemented as shown in Diagram 1. The camera is implemented in a standardized way via GenICam and one of the AIA or JIIA physical interface standards mentioned above. If lighting or lens controllers are present at all, they likely use the consumer versions of the Gigabit Ethernet or USB interfaces and present themselves to the host software as third-party custom plug-ins, rather than through a common API for all three device types.

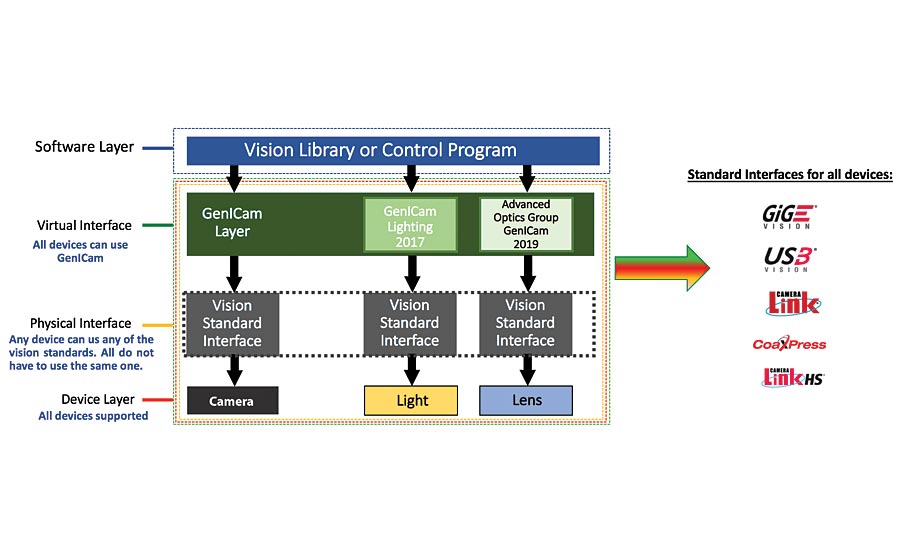

This is beginning to change, though. Activity in the AIA, EMVA, and JIIA standards committees means that much more is possible today. In 2017, a group headed by Gardasoft, CCS Inc., and Smart Vision Lights successfully incorporated initial lighting support into the GenICam standard. In early 2019, a group of manufacturers formed the Advanced Optics Group and approached the GenICam committee to begin adding optics.

When completed, this work will allow GenICam to serve as a common layer for cameras, lenses, and lighting, and will allow these devices to operate on any of the AIA or JIIA standard physical interfaces: Camera Link, GigE Vision, USB3 Vision, CoaXPress, or Camera Link HS. This promising development brings us closer to the ideal vision shown in Diagram 2.
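The practical payoff of a common layer is that one piece of application code can drive all three device types. The minimal sketch below pushes a single inspection "recipe" to a camera, a lens, and a light through the same call. `ExposureTime` is a real SFNC camera feature. `LensFocusPosition` and `LightIntensityPercent` are hypothetical names chosen for illustration, not names taken from the GenICam lighting or optics work.

```python
from dataclasses import dataclass, field


@dataclass
class SimulatedDevice:
    """Any device (camera, lens, or light) exposing named features with valid ranges."""
    role: str                                # "camera", "lens", or "light"
    limits: dict[str, tuple[float, float]]   # feature name -> (min, max)
    values: dict[str, float] = field(default_factory=dict)

    def set_feature(self, name: str, value: float) -> None:
        low, high = self.limits[name]
        if not low <= value <= high:
            raise ValueError(f"{self.role}.{name}={value} outside [{low}, {high}]")
        self.values[name] = value


def apply_recipe(devices: list[SimulatedDevice],
                 recipe: dict[str, dict[str, float]]) -> None:
    """Push one inspection recipe to every device through the same call."""
    for device in devices:
        for name, value in recipe.get(device.role, {}).items():
            device.set_feature(name, value)


camera = SimulatedDevice("camera", {"ExposureTime": (10.0, 1e6)})
lens = SimulatedDevice("lens", {"LensFocusPosition": (0.0, 1000.0)})     # hypothetical name
light = SimulatedDevice("light", {"LightIntensityPercent": (0.0, 100.0)})  # hypothetical name

apply_recipe([camera, lens, light], {
    "camera": {"ExposureTime": 2000.0},
    "lens": {"LensFocusPosition": 412.0},
    "light": {"LightIntensityPercent": 80.0},
})
```

Today, the lens and light entries would each need their own vendor plug-in. With a common layer, they become ordinary feature writes.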

As we discuss the ideal vision of integrated control across devices and the plug-n-play expectations of potential users, it is also necessary to note aspects of power and timing. All of the interfaces discussed here offer power over the interface, including USB3 and Ethernet (PoE). Lenses and LED lights of significant size can now be reliably powered by a single cable via the network connection.
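A quick power-budget check shows what "significant size" means in practice. The sketch below uses the nominal power available at the powered device for default USB 2.0/3.x ports and for the IEEE 802.3af, 802.3at, and 802.3bt PoE types. It omits USB Power Delivery and the power-over-cable schemes of Camera Link and CoaXPress. The 20% safety margin and the example loads are assumptions for illustration.

```python
from typing import Optional

# Nominal power available at the powered device, in watts.
POWER_BUDGET_W = {
    "USB 2.0 port (500 mA at 5 V)": 2.5,
    "USB 3.x port (900 mA at 5 V)": 4.5,
    "IEEE 802.3af PoE (Type 1)": 12.95,
    "IEEE 802.3at PoE+ (Type 2)": 25.5,
    "IEEE 802.3bt (Type 3)": 51.0,
    "IEEE 802.3bt (Type 4)": 71.3,
}


def smallest_sufficient_source(load_w: float, margin: float = 0.2) -> Optional[str]:
    """Return the lowest-power source covering load_w plus a safety margin, or None."""
    required_w = load_w * (1 + margin)
    candidates = [(watts, name) for name, watts in POWER_BUDGET_W.items()
                  if watts >= required_w]
    return min(candidates)[1] if candidates else None


for load in (3.0, 4.0, 20.0, 80.0):  # hypothetical lens and light loads
    print(f"{load:5.1f} W -> {smallest_sufficient_source(load)}")
```

With this margin, a 20 W LED light fits on PoE+. An 80 W load exceeds even the largest 802.3bt budget and would need another supply.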

Add to that the IEEE 1588 Precision Time Protocol (PTP) for Ethernet devices. IEEE 1588 PTP lets Ethernet devices on a network synchronize their clocks to a common master clock, so events can be synchronized automatically. The GenICam and GigE Vision standards support IEEE 1588 PTP, but only optionally. USB3 Vision and the underlying USB3 interface do not yet have an equivalent, although one may be technically possible and could be developed. Camera Link, Camera Link HS, and CoaXPress are all real-time or low-latency enough to carry signaling between devices over the interface. Users hate I/O cables and trigger problems between devices, and this could be coming to an end.
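The arithmetic behind PTP's basic delay request-response mechanism is compact. The master timestamps a Sync message on send (t1), and the slave timestamps it on arrival (t2). The slave then timestamps a Delay_Req on send (t3), and the master timestamps it on arrival (t4). Assuming the path delay is the same in both directions, these four timestamps yield the slave's clock offset and the link delay. Once each device knows its offset, a camera and a light can each convert one agreed trigger time into their own local clocks. The timestamps below are invented, and the helper functions are illustrative, not any vendor's API.

```python
from dataclasses import dataclass


@dataclass
class SyncExchange:
    """Timestamps, in nanoseconds, from one IEEE 1588 Sync / Delay_Req exchange."""
    t1: int  # master sends Sync (master clock)
    t2: int  # slave receives Sync (slave clock)
    t3: int  # slave sends Delay_Req (slave clock)
    t4: int  # master receives Delay_Req (master clock)


def offset_and_delay(x: SyncExchange) -> tuple[float, float]:
    """Return (slave offset from master, mean one-way path delay).

    Assumes the path delay is the same in both directions.
    """
    forward = x.t2 - x.t1   # path delay + offset
    backward = x.t4 - x.t3  # path delay - offset
    return (forward - backward) / 2, (forward + backward) / 2


def local_fire_time(master_time_ns: int, offset_ns: float) -> float:
    """Convert an agreed trigger time on the master clock to a device's own clock."""
    return master_time_ns + offset_ns


# Camera clock runs 500 ns ahead of the master; the link delay is 100 ns.
camera_offset, delay = offset_and_delay(SyncExchange(t1=1000, t2=1600, t3=2000, t4=1600))
# Light clock runs 200 ns behind the master (hypothetical values).
light_offset, _ = offset_and_delay(SyncExchange(t1=1000, t2=900, t3=2000, t4=2300))

trigger_at = 10_000  # agreed trigger instant on the master clock
print(local_fire_time(trigger_at, camera_offset))  # 10500.0 on the camera's clock
print(local_fire_time(trigger_at, light_offset))   # 9800.0 on the light's clock
```

Both devices fire at the same master-clock instant without a trigger cable between them, provided the symmetric-delay assumption holds.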

The benefits of consolidating functions and expanding GenICam may also help meet expectations in other ways. Consider the industrial internet of things (IIoT) and the smart cloud connectivity that system builders and their users demand: GenICam may be a potential enabler here as well. IIoT itself is about the exchange and reporting of device information. There are many devices, data types, and ways that this data may be packaged and reported to the IIoT layer from the device or system. GenICam standardizes device communications via a sub-component called the Standard Features Naming Convention (SFNC). The SFNC standardizes a name (label), control register, and valid range for each function in a device. It may be the perfect layer to wrap these standardized responses and report them to the IIoT layer.
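Because SFNC names are the same across compliant devices, a reporting layer can be written once. The minimal sketch below wraps a few feature values in a JSON message. `DeviceVendorName`, `DeviceModelName`, `DeviceSerialNumber`, `DeviceTemperature`, and `ExposureTime` are SFNC feature names. The feature values, the payload layout, and the `build_iiot_report` helper are hypothetical, and no particular IIoT protocol or cloud schema is implied.

```python
import json
from datetime import datetime, timezone


def build_iiot_report(features: dict[str, object], reported: list[str]) -> str:
    """Wrap selected SFNC-named feature values in a JSON message.

    The payload layout is a made-up example, not a published schema.
    """
    device_id = "/".join(str(features[k]) for k in
                         ("DeviceVendorName", "DeviceModelName", "DeviceSerialNumber"))
    payload = {
        "device_id": device_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "features": {name: features[name] for name in reported if name in features},
    }
    return json.dumps(payload, sort_keys=True)


# Feature values as they might be read through a GenICam API (hypothetical values).
camera_features = {
    "DeviceVendorName": "ExampleVendor",
    "DeviceModelName": "Cam-100",
    "DeviceSerialNumber": "0001",
    "DeviceTemperature": 41.5,
    "ExposureTime": 5000.0,
}
report = build_iiot_report(camera_features, ["DeviceTemperature", "ExposureTime"])
print(report)
```

The same function would work unchanged for a lens or light once those devices expose SFNC-named features.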

Diagram 2: We are on the verge of offering the ideal “fully integrated” vision experience. Users can have the performance and flexibility of component-based systems, while still benefitting from seamless control across standard vision interfaces.

Bringing this all together, all of the pieces needed to make the ideal of plug-n-play, component-based machine vision systems a reality exist today. For the first time, users can envision buying USB or Ethernet cameras, lighting, and lenses, plugging them into powered hubs, having them automatically synchronize over the physical interface, and controlling them seamlessly from a single standardized API or GUI. Envision high performance imaging components being controlled as if they were a fully integrated security or web camera. These fully integrated machine vision systems may even become smart systems in an IIoT network with smart cloud connectivity.

If this ideal vision for machine vision systems is possible, why doesn't a standardized answer exist today? The answer is that the current AIA and JIIA interface standards lack a system-level approach, and many of the elements required to implement the full functionality described here remain optional. Truly implementing this ideal vision would mean making all of these elements mandatory for all devices and software. Within the current interface standards, each standard would have to be expanded to require all the necessary elements. Alternatively, a new system-level standard could be proposed to bring all the required elements together and define any missing pieces. It isn't clear exactly how this should come together. However, looking at the available pieces and the gap between user expectations and today's vision systems, it is hard to imagine a future where this ideal vision system doesn't exist. V&S
